HPE MSA - DISCERNING STORAGE ALLOCATION

10/01/21 - storage,msa,san,kb

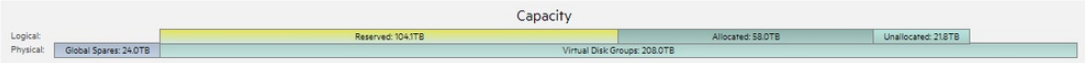

On the main/home screen, right in the middle is a bar which shows overall system Capacity. The top bar is your Logical capacity, and your bottom bar is the Physical capacity.

Simply put, your Physical capacity relates directly to how many and what type of drives you have physically installed. If you put all of the disks in a single pool (pools can have multiple groups), then there will be one single spot for all of your data. If you were doing a performance/archive (gold/silver) setup, you would not have a single disk pool.

REPAIRING WITH MDADM

09/01/2021 - mdadm,linux,kb,storage

When adding a new drive, or replacing an old one, sometimes you need to verify which device name it was given in Linux.

# lsblk

This will give you a breakdown of how each block device is used (or not). In the example to the right, you can see that /dev/sdg is empty. Before we go about adding it, let’s double-check our array.
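If you would rather not eyeball the listing, the same check can be sketched in Python. This is a minimal sketch, not part of the original walkthrough: it assumes the JSON produced by `lsblk --json -o NAME,TYPE,FSTYPE,MOUNTPOINT`, and both the `unused_disks` helper and the sample data are made up for illustration.

```python
import json


def unused_disks(lsblk_json: str) -> list[str]:
    """Return /dev paths of whole disks with no partitions, filesystem, or mountpoint."""
    devices = json.loads(lsblk_json).get("blockdevices", [])
    unused = []
    for dev in devices:
        if dev.get("type") != "disk":
            continue  # skip loop devices, optical drives, etc.
        # A disk counts as "in use" if it has child devices (partitions, md arrays),
        # a filesystem signature, or a mountpoint.
        in_use = dev.get("children") or dev.get("fstype") or dev.get("mountpoint")
        if not in_use:
            unused.append("/dev/" + dev["name"])
    return unused


# Hypothetical sample shaped like `lsblk --json -o NAME,TYPE,FSTYPE,MOUNTPOINT` output.
SAMPLE = """
{
  "blockdevices": [
    {"name": "sda", "type": "disk", "fstype": null, "mountpoint": null,
     "children": [{"name": "sda1", "type": "part", "fstype": "ext4", "mountpoint": "/"}]},
    {"name": "sdf", "type": "disk", "fstype": "linux_raid_member", "mountpoint": null,
     "children": [{"name": "md0", "type": "raid5", "fstype": "ext4", "mountpoint": "/data"}]},
    {"name": "sdg", "type": "disk", "fstype": null, "mountpoint": null}
  ]
}
"""

print(unused_disks(SAMPLE))  # ['/dev/sdg']
```

On a live system you would feed it the real command output instead of the sample. Treat the result as a hint only, and confirm against the array itself before adding anything.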

HOW DATA STORAGE MANAGEMENT HAS CHANGED IN 2020

12/26/20 - storage

The COVID-19 pandemic has caused IT teams to change their approach to data storage management. In response to the disruption, groups have moved en masse to the cloud, migrated storage operations, and deployed virtual desktop environments.

But it is not the same for all storage administrators and engineers. Those dealing with enterprise environments and large numbers of end users working from home are putting a lot of work and effort into migrating to the cloud, while many smaller businesses are likely making do with their current setup and perhaps just using a dial-in VPN solution to access onsite resources.

Despite this, the majority of administrators have changed their practices to meet changing requirements for data storage management. Let us look at a few of the trends that have resulted, and what they may mean after the pandemic is over.

BACKUP TESTING AND ITS IMPORTANCE

12/17/20 - backups

To undermine your backups is to undermine your business. But backing up your data is only a fraction of the big picture for a viable disaster recovery plan. Simply put, the purpose of backups is to protect against hardware failures, outages, corruption, acts of nature, and user error.

What a lot of small businesses do not seem to realize is that downtime costs a lot of money. Not only are you potentially not making any income, but you are also still paying your employees, among other costs, such as a botched disaster recovery plan that was never accounted for.

But even if a redundant backup system provides minimal downtime in the event of total failure, it is still worthless if your backups are bad. Imagine having a complete system failure, and both your onsite and offsite backups for the past 6 months are all corrupt.

SSDs on RAID

12/12/20 - storage,kb

RAID arrays have been the foundation of storage systems since the ’90s, in everything from small businesses to enterprise environments. Originating from Berkeley in the ’80s, the concept of RAID made it possible to build in storage redundancy and to stripe data across multiple disks, presenting storage as a single pool instead of organizing it across multiple separate volumes. Oftentimes, an array of multiple inexpensive disks will outperform a single, larger disk in a large number of ways.

Not always expressly mentioned, another benefit of an array is an increase in read (and sometimes write) speeds. Couple this with a larger single volume and redundancy to protect against physical disk failure, and RAID is an easy decision.
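To put rough numbers on the trade-off between a larger single volume and redundancy, here is a minimal Python sketch of usable capacity for the common RAID levels. It assumes identical disks and ignores controller and filesystem overhead; the `usable_capacity_tb` helper is hypothetical and not taken from any vendor tool.

```python
def usable_capacity_tb(level: int, disks: int, disk_tb: float) -> float:
    """Usable capacity of an array of identical disks, ignoring metadata overhead."""
    minimum_disks = {0: 2, 1: 2, 5: 3, 6: 4, 10: 4}
    if level not in minimum_disks:
        raise ValueError(f"unsupported RAID level: {level}")
    if disks < minimum_disks[level]:
        raise ValueError(f"RAID {level} needs at least {minimum_disks[level]} disks")
    if level == 10 and disks % 2:
        raise ValueError("RAID 10 needs an even number of disks")

    if level == 0:
        return disks * disk_tb          # pure striping, no redundancy
    if level == 1:
        return disk_tb                  # every disk holds a full copy
    if level == 5:
        return (disks - 1) * disk_tb    # one disk's worth of parity
    if level == 6:
        return (disks - 2) * disk_tb    # two disks' worth of parity
    return (disks // 2) * disk_tb       # RAID 10: striped mirror pairs


# Four 2 TB disks at each level that supports four disks.
for level in (0, 5, 6, 10):
    print(f"RAID {level}: {usable_capacity_tb(level, 4, 2.0)} TB usable")
```

With four 2 TB disks this gives 8 TB for RAID 0, 6 TB for RAID 5, and 4 TB for both RAID 6 and RAID 10, which is the capacity you give up in exchange for surviving disk failures.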

BENEFITS OF USING DESKTOP AS A SERVICE (DaaS)

12/11/20 - desktop,vdi

In today’s climate of telecommuting, along with increasing security concerns, DaaS is quickly becoming a much more sought-after option than physical devices for end users. While this does still require an end user to have a physical device to log in to the DaaS with, costs and maintenance can be reduced on these devices, as their local resources don’t affect the performance of the DaaS environment; only the Internet bandwidth they have access to does.

The possibilities of a required device to connect to the DaaS are pretty open – imagine being able to ship out a consumer-level laptop to an end-user that is completely locked down, essentially giving the user a glorified Kiosk with access to Ethernet, Wireless, and a shortcut to their DaaS, and nothing else.

WHY SOPHOS XG IS SUBPAR

12/04/20 - review,sophos

Before I begin, let me clarify that this is a largely opinionated piece, and I acknowledge I may be biased. Be that as it may, I have 3 years of experience with the setup and administration of Sophos XG firewalls, have set up at least 20 new ones, and am a Sophos Certified Architect. I am far from the best engineer, but I do know what I am doing.

So, with that out of the way, where do I begin?

Hardware Performance

Let’s start with the most common issue: the hardware appliance. The hardware is subpar, at best. But how can I substantiate that? The most noticeable evidence is the WebGUI: sluggish is an understatement for how poorly it can perform on even a 310 series appliance. The appliances I’ve taken apart have all had SSDs as the storage device, and the DDR3 is at a relatively decent clock speed, so what gives?

SOPHOS XG V18 FIREWALL RULES & NAT POLICIES

11/29/20 - firewall,sophos,kb

In XG v18, NAT policies were split from Security (Firewall in XG) rules. You can, however, create a “linked” NAT rule, which will only trigger when traffic passes that specific Security rule. But this won’t be covering that method.

Instead, this will be covering how to set up Firewall/Security and NAT rules on a system which splits those rules. In many firewalls you will come across, Security and NAT rules are kept and created separately.

This also largely covers the theory of firewall rules and NAT policies. While it is somewhat specific to Sophos XG, the concept works across the majority of major firewalls.

DFSR AUTHORITATIVE RESTORE

11/24/19 - activedirectory,windows,dfsr,kb,storage

Steps should be run from the Authoritative DC (generally the one holding the PDC Emulator role), unless otherwise noted.

You can verify which server has the PDC Emulator role by running from an elevated command prompt:

DOMAIN RENAME

11/23/19 - activedirectory,windows,kb

Foreword:

- If AD Connect/DirSync is installed, and there is an active sync to Azure AD/Office 365, uninstall it.

- Take note of which OUs are actively being synced.

- Make sure you have a backup of DNS/ADDS

- While this COULD be done from a DC, or a domain-joined server, it may be easier to do this from a domain-joined workstation – even one which is spun up only for this purpose.

- You will need some of the RSAT tools installed for ADDS, DNS, and GPM

- Wherever you choose to do this, this system will be referenced as the Control System from this point forward